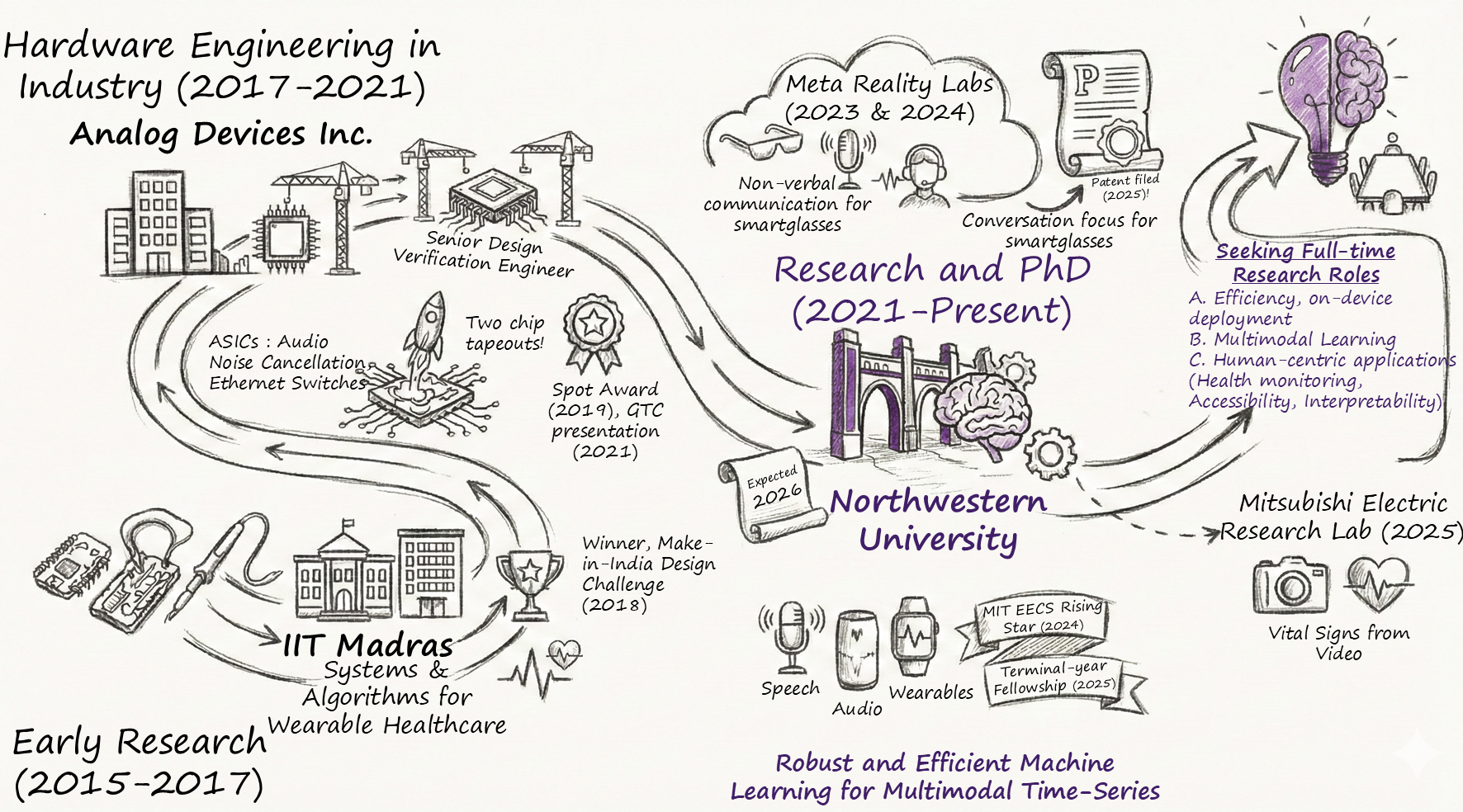

Hello! I am Payal (hear pronunciation). I am a final-year PhD candidate in Computer Engineering at Northwestern University, advised by Prof. Qi Zhu. I work on machine learning for time series under practical constraints, with a focus on healthcare and audio applications. Previously, I was a student researcher at Mitsubishi Electric Research Labs (Summer 2025) and at Meta Reality Labs (Summers 2023 and 2024). My current research focuses on:

1. Practical Multimodal Learning: I design efficient architectures for handling 10+ heterogeneous sensing modalities without combinatorial complexity. My work on MAESTRO (NeurIPS Spotlight 2025) introduces sparse adaptive cross-attention with symbolic tokenization, replacing expensive pairwise operations with scalable multimodal fusion. I showed that explicitly encoding missingness improves longitudinal signal monitoring for person identification, achieving top performance in the ICASSP’23 Signal Processing Grand Challenge. I also studied missingness in multimodal disfluency detection (InterSpeech’24), where opportunistically incorporating video when available enhanced performance.

2. Generalizable Time-Series Representations: I build models robust to distribution shifts caused by device changes and signal nonstationarity, both common in real-world applications. I proposed a phase-anchored generalizable representation learning framework that performs well across a wide range of applications, from sleep stage classification using electroencephalography (EEG) to gesture recognition using electromyography (EMG) (TMLR’25, TS4H@NeurIPS’25).

3. Human-Centric Applications: I develop ML methods for: a) Subjective Labels: I designed custom optimization objectives to monitor physical fatigue in manufacturing workers at Boeing and John Deere (PNAS Nexus’24) and multi-label objectives to model auditory attention with Meta Reality Labs. b) Data Constraints: I designed data distillation and self-supervised frameworks for disfluency detection (ICASSP’23) and achieved state-of-the-art silent speech understanding from surface EMG without paired audio using LLMs (ACL’25).

From 2017 to 2021, I was a Design Engineer at Analog Devices Inc., where I worked on the consumer electronics team and supported the tape-out of two chips. I earned my Master’s in Electrical Engineering from IIT Madras, India, where I designed an end-to-end cardiac wrist-wearable (sensor and on-device algorithms) robust to skin pigmentation variations. My research interests are a product of my broad system-level experience in consumer sensing devices, which helps me prioritize pragmatism while developing state-of-the-art machine learning methods for sensing applications.

I am seeking full-time research positions in industry and academia for Spring/Summer 2026. Please review my CV or contact me to discuss opportunities.

News

- Nov 2025 – Our paper TimeSliver: Symbolic-Linear Decomposition for Explainable Time-series Classification accepted at ICLR 2026

- Nov 2025 🌟 – Our paper Phase-driven Generalizable Representation Learning for Nonstationary Time Series Classification accepted at TMLR 2025 and at the TS4H Workshop at NeurIPS 2025 as a spotlight (top 9%)

- Oct 2025 – Received Terminal Year Fellowship at Northwestern University

- Oct 2025 – Invited talk at the Real-Time Communications Conference and Expo at Illinois Institute of Technology

- Sept 2025 🌟 – Our paper MAESTRO: Adaptive Sparse Attention and Robust Learning for Multimodal Dynamic Time Series accepted at NeurIPS 2025 as a spotlight (top 3%)

- June 2025 – Interning with Mitsubishi Electric Labs (MERL), Boston, MA as a Research Scientist. Reach out if you are here and want to collaborate (or just catch up over coffee ☕)

- May 2025 – Our paper Can LLMs Understand Unvoiced Speech? Exploring EMG-to-Text Conversion with LLMs accepted at ACL 2025 Main Conference

- April 2025 – Speaker at CoDEX symposium, Northwestern University

- Dec 2024 🏃‍♀️ – Successfully passed my PhD Prospectus examination

- Oct 2024 📰 – Our paper Wearable Network for Multi-Level Physical Fatigue Prediction in Manufacturing Workers accepted in PNAS Nexus. Featured by Northwestern Engineering, PopSci, and other outlets

- Aug 2024 🏆 – Selected as EECS Rising Star 2024. Invited to MIT workshop (article)

- June 2024 – Started summer internship with Meta Reality Labs as a Research Scientist

- June 2024 – Our paper Missingness-resilient Video-enhanced Multimodal Disfluency Detection accepted for oral presentation at InterSpeech 2024

- Feb 2024 – Internship work with Meta Reality Labs on Non-verbal Hands-free Control for Smart Glasses using Teeth Clicks is now live

- Oct 2023 – Collaborating with Meta Reality Labs Audio Research group as a part-time student researcher

- July 2023 – Our paper Effect of Attention and Self-Supervised Speech Embeddings on Non-Semantic Speech Tasks accepted at ACM Multimedia 2023 Grand Challenges Track

- June 2023 – Interning with Meta Reality Labs, Redmond, WA as a Research Scientist. Reach out if you are here and want to collaborate (or just catch up over coffee ☕)

- Feb 2023 – Our paper Efficient Stuttering Event Detection using Siamese Networks accepted at ICASSP 2023

- Feb 2023 – Secured third place in e-Prevention Challenge at ICASSP 2023. Invited to present Person Identification with Wearable Sensing using Missing Feature Encoding and Multi-Stage Modality Fusion

Selected Papers

Selected Recognition

Terminal Year Fellowship, Northwestern University (2025)

Invited Speaker, IEEE Real-Time Communications Conference and Expo, Illinois Institute of Technology (2025) 📰 RTC Profile 🎥 Talk Video 📋 Slides

Speaker, CoDEX Symposium, Northwestern University (2024) 📰 Featured Article 🎥 Talk Video

EECS Rising Star, MIT Workshop (2024) 📰 MIT Profile 📰 Northwestern News 🎥 Talk Video

Worker Fatigue Research published in PNAS Nexus was highlighted in several media outlets (2024): Popular Science, TechXplore, MSN, News-Medical, Yahoo Tech, Northwestern

Top Performer, Computational Paralinguistics Challenge (ComParE), ACM Multimedia (2023)

Top Performer, ICASSP Signal Processing Grand Challenge (2023)

Third Place, e-Prevention Challenge, ICASSP (2023)

Best Research Video, Design Automation Conference Young Fellowship (2021)

Winner, Make-in-India Anveshan Design Challenge, Analog Devices Inc. (2018) 📰 ADI Fellowship India Today